Scraping the Reddit forum WallStreetBets (WSBs) with Python couldn’t be easier. What’s more, we can even analyse the sentiment of posts using the NLTK Python library.

The most common methods of getting data from Reddit are (i) using the official Reddit API, and (ii) scraping. Both methods have pros and cons; whilst the API imposes rate limits, it is simpler than scraping.

Although this article focuses on using the API, if you choose to scrape Reddit, make sure to use “Old Reddit” (old.reddit.com) rather than the newer homepage.

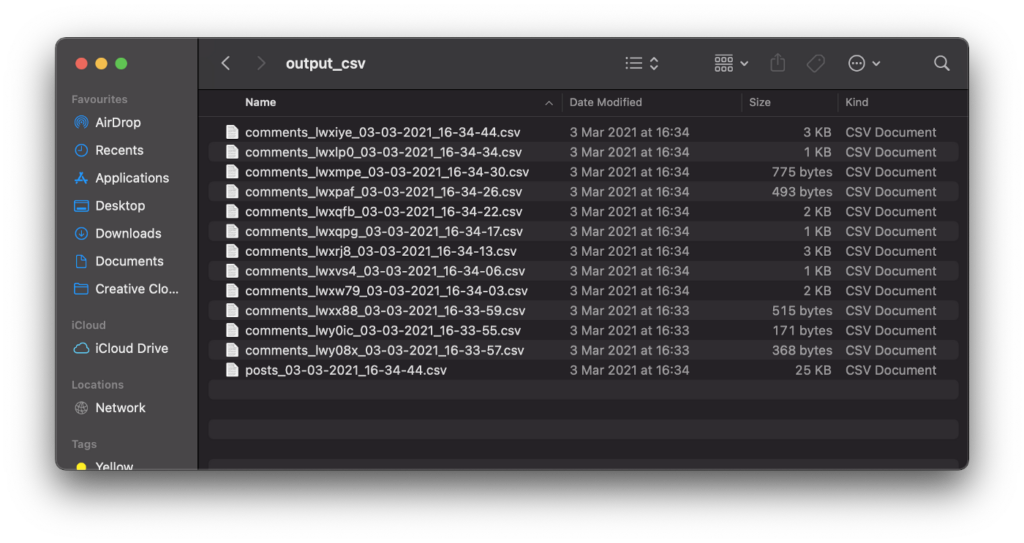

At a high level, the code outlined in this article (i) downloads the most recent posts from WSBs, (ii) loads all of the associated comments, (iii) extracts tickers from both posts and comments, and (iv) determines their sentiment. From this, the most common tickers and the summed sentiment associated with each post are output as a series of CSVs.

Full code

Before we dive into aspects of the code, note that this isn’t a comprehensive coding tutorial; the full code can be found on my GitHub. Rather, I’m going to outline the main aspects of the code in the hope that it will help you modify it to fit your use case. It could even be applied to an entirely different subreddit.

How to use the Reddit API

On the whole, the Reddit API is very easy to use. To get all of the data we need, we can use simple HTTP GET requests.

We need to make two separate calls to the API – the first to get the most recent posts, and the second to get the comments (or “replies”) on each post. All of the API endpoints are outlined in the Reddit API documentation.

The API code is split across three functions: request_data(), get_recent_posts(), and get_post_comments().

request_data() downloads the content of a given URL using the Python Requests library.

Pay particular attention to the user-agent portion of the headers variable. Reddit ask that users don’t use generic user agents (such as ‘Mozilla/5.0’) when accessing the API, and threaten to block individuals who do. Instead, you should use a unique, descriptive user agent taking the form <platform>:<app ID>:<version string> (by /u/<reddit username>).
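As a rough illustration, request_data() could look something like the sketch below. It is not the author’s exact code: the USER_AGENT value is a hypothetical placeholder to replace with your own details, and returning the parsed JSON directly (rather than the raw content) is an assumption.

```python
import requests

# Hypothetical user agent: swap in your own platform, app ID, version and username.
USER_AGENT = "python:wsb-sentiment:v0.1 (by /u/your_username)"


def request_data(url, timeout=10):
    """Download a Reddit API URL with a descriptive user agent and return the parsed JSON."""
    headers = {"User-Agent": USER_AGENT}
    response = requests.get(url, headers=headers, timeout=timeout)
    # Raise on 4xx/5xx responses, e.g. 429 when the rate limit has been hit.
    response.raise_for_status()
    return response.json()
```

Calling it is then a one-liner, for example request_data("https://www.reddit.com/r/wallstreetbets/new.json?limit=10").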

get_recent_posts() formulates a URL to grab the most recent posts from the subreddit. Depending on the user’s requirements, the URL can return between 1 and 100 results (specified using the count variable). The URL is then fed to request_data() in the final line, and the result is returned as JSON.
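A minimal sketch of the URL-building step is below. Reddit’s listing endpoint takes the number of results as a limit query parameter (its own count parameter means the number of items already seen), so mapping the article’s count variable onto limit is an assumption about how the full code does it. The helper build_recent_posts_url and the fetch argument are hypothetical; fetch is passed in so the sketch stands alone, and in practice it would be request_data().

```python
from urllib.parse import urlencode

REDDIT_BASE = "https://www.reddit.com"


def build_recent_posts_url(subreddit="wallstreetbets", count=100):
    """Build the /new listing URL; Reddit returns at most 100 items per request."""
    if not 1 <= count <= 100:
        raise ValueError("count must be between 1 and 100")
    # Reddit calls this query parameter `limit`; its own `count` parameter
    # means something else (the number of items already seen).
    return f"{REDDIT_BASE}/r/{subreddit}/new.json?{urlencode({'limit': count})}"


def get_recent_posts(fetch, subreddit="wallstreetbets", count=100):
    """Fetch the newest posts; `fetch` maps a URL to parsed JSON (e.g. request_data)."""
    return fetch(build_recent_posts_url(subreddit, count))
```

Validating count up front means an out-of-range value fails loudly instead of Reddit silently clamping it.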

get_post_comments() is similar to get_recent_posts() in that it constructs a URL which is fed to request_data() to retrieve data from the Reddit API (as JSON). The URL constructed by get_post_comments() returns (almost*) all of the comments associated with that post.

*Whilst the comment endpoint returns many comments, when Reddit determines there are too many to return at once it holds back a portion. These must then be fetched from a different endpoint (/api/morechildren). As we’re only interested in gaining a broad understanding of sentiment, these additional comments are ignored.
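get_post_comments() can be sketched along the same lines, assuming the post ID comes from a post’s id field. The comments endpoint returns a two-element JSON array: the first listing holds the post itself, and the second holds the comment tree. The helper build_post_comments_url, the optional sort argument and the fetch parameter are illustrative choices, not necessarily what the full code does.

```python
from urllib.parse import urlencode

REDDIT_BASE = "https://www.reddit.com"


def build_post_comments_url(post_id, subreddit="wallstreetbets", sort=None):
    """Build the comments URL for a single post, identified by its base-36 ID."""
    url = f"{REDDIT_BASE}/r/{subreddit}/comments/{post_id}.json"
    if sort is not None:
        url += "?" + urlencode({"sort": sort})  # e.g. "top", "new", "confidence"
    return url


def get_post_comments(fetch, post_id, subreddit="wallstreetbets", sort=None):
    """Return the raw JSON: [listing containing the post, listing of comments]."""
    return fetch(build_post_comments_url(post_id, subreddit, sort))
```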

Using Reddit API JSON data

The JSON returned by the posts and comments Reddit API endpoints is nested, which means that we need to loop over the data. However, extracting all of the important information from the comments JSON is much more challenging than working with the posts data.

To work with the posts data, we need only one method and an additional loop.

The code makes a call to get_recent_posts(), and then processes each subsection of that JSON (one per post) using the parse_post() method. parse_post() extracts only the elements we are interested in, and returns the values (along with a key) as a Python dictionary.
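Here is a sketch of that method-plus-loop pattern, assuming the standard shape of a Reddit posts Listing. Which fields to keep is a judgement call; the ones below (id, title, selftext, score, num_comments, created_utc) are common choices rather than the author’s confirmed list, and parse_posts is a hypothetical name for the loop.

```python
def parse_post(post):
    """Keep only the fields we need from one child of a posts Listing."""
    data = post["data"]
    return {
        "id": data["id"],
        "title": data.get("title", ""),
        "body": data.get("selftext", ""),  # empty for link posts
        "score": data.get("score", 0),
        "num_comments": data.get("num_comments", 0),
        "created_utc": data.get("created_utc"),
    }


def parse_posts(listing):
    """Loop over a posts Listing: {"data": {"children": [{"kind": "t3", "data": {...}}]}}."""
    posts = []
    for child in listing["data"]["children"]:
        if child.get("kind") == "t3":  # t3 is Reddit's type prefix for posts (links)
            posts.append(parse_post(child))
    return posts
```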

As previously stated, handling the comments is more complicated – rather than using a single method to parse the data, we rely on a set of methods.
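One way to implement that set of methods is a recursive walk over the reply tree. The sketch below assumes the usual shape of Reddit’s comment JSON: comments are t1 children whose replies field is either an empty string or another Listing, and held-back comments appear as “more” stubs carrying a count. The function names are hypothetical, and the stubs are tallied rather than fetched, in line with the decision above to ignore them.

```python
def flatten_comments(listing, depth=0):
    """Recursively flatten a comment Listing; return (comments, number held back)."""
    comments, held_back = [], 0
    for child in listing["data"]["children"]:
        if child["kind"] == "more":
            # Stub for comments Reddit didn't send; skip them, but record how many.
            held_back += child["data"].get("count", 0)
            continue
        if child["kind"] != "t1":  # t1 is Reddit's type prefix for comments
            continue
        data = child["data"]
        comments.append({
            "id": data["id"],
            "body": data.get("body", ""),
            "score": data.get("score", 0),
            "depth": depth,
        })
        replies = data.get("replies")
        if replies:  # an empty string when there are no replies
            nested, nested_held = flatten_comments(replies, depth + 1)
            comments.extend(nested)
            held_back += nested_held
    return comments, held_back


def parse_comments(response):
    """response[0] is the post's Listing; response[1] holds the comment tree."""
    return flatten_comments(response[1])
```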

Extracting tickers from WallStreetBets in Python

Tickers are important to our application as they tell us which stocks are being discussed. Considered alongside our assessment of sentiment (outlined shortly), they can suggest which stocks are worth researching.

Before we can do anything, we need a list of tickers to work with. Fortunately, Nasdaq provide CSVs containing tickers for all of the major American exchanges; download the CSVs for the exchanges you’re interested in.

Once the CSVs are downloaded to a local directory, we need to load a single column (the ticker symbols) from each of them into our code; the rest can be discarded. In the full code, a Ticker class handles all aspects of ticker identification, from CSV loading to entry matching.

load_tickers() loads our CSV files and converts the ticker column into a Python list.
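A minimal stand-alone version of load_tickers() might look like this. It assumes the ticker column in the Nasdaq CSVs is headed Symbol (check your downloaded files), and de-duplicating symbols across exchanges is an added convenience rather than something the article describes. Reading with utf-8-sig copes with a byte-order mark if the file has one.

```python
import csv


def load_tickers(paths, column="Symbol"):
    """Read one column from each Nasdaq CSV and return a de-duplicated list of tickers."""
    tickers, seen = [], set()
    for path in paths:
        with open(path, newline="", encoding="utf-8-sig") as handle:
            for row in csv.DictReader(handle):
                symbol = (row.get(column) or "").strip().upper()
                if symbol and symbol not in seen:
                    seen.add(symbol)
                    tickers.append(symbol)
    return tickers
```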

To match and extract any tickers in a given string, we call check_for_tickers(), providing both a list of tickers (sourced from the Nasdaq CSVs) and the string we want to check.

Whilst most of the code is self-explanatory, the banned_tags list deserves a comment. Owing to their short length and alphabetical nature, tickers can sometimes take the form of words or acronyms. Take “YOLO”, which is both an acronym and a cannabis ETF, or “GOOD”, which represents Gladstone Commercial Corporation. Listing such tickers in banned_tags stops everyday words and slang from being counted as stock mentions.
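The sketch below shows one way check_for_tickers() and banned_tags can fit together. Treating only runs of 1 to 5 capital letters (optionally prefixed with “$”) as candidates is an assumption, as is every entry in the ban list beyond the article’s “YOLO” and “GOOD”; DD (DuPont) and A (Agilent) are added purely as further examples of tickers that double as common words.

```python
import re

# YOLO and GOOD come from the article; DD (DuPont) and A (Agilent) are illustrative
# additions: real tickers that also appear as everyday words or slang on WSB.
BANNED_TAGS = {"YOLO", "GOOD", "DD", "A"}

# Candidate tickers: 1-5 capital letters, optionally prefixed with "$" (e.g. "$GME").
TOKEN_PATTERN = re.compile(r"\$?\b[A-Z]{1,5}\b")


def check_for_tickers(tickers, text, banned_tags=BANNED_TAGS):
    """Return known tickers mentioned in `text`, in order of first appearance."""
    known = set(tickers)  # set membership is much faster than scanning a long list
    found = []
    for token in TOKEN_PATTERN.findall(text):
        symbol = token.lstrip("$")
        if symbol in known and symbol not in banned_tags and symbol not in found:
            found.append(symbol)
    return found
```

Requiring capitals keeps “Good luck” from matching GOOD even before the ban list is consulted; the ban list then catches shouted words such as “YOLO”.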

Getting the sentiment of WallStreetBets posts using NLTK and Python

The Natural Language Toolkit (NLTK) is “a suite of libraries and programs for symbolic and statistical natural language processing”. By applying the library to Reddit posts and comments, we can rapidly get an idea of sentiment.

Before we look at the code, it’s worth noting that the model included in the code is not optimised for use on WSBs, or financial information generally. Rather, the model was designed to assess the sentiment of Tweets. If you’re looking to improve on the code posted on GitHub, then altering the sentiment analysis portion would be a great place to start.

When a string is passed to the NLTK model, it returns either a “positive” or a “negative” result. Whilst this may seem rudimentary, we can use it to determine whether a discussion is positive or negative, guiding our further reading. Although modified, this code is based on Daityari’s example on the DigitalOcean Community pages. For more information on coding specifics, I highly recommend you check their article out.
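To show the shape of this approach, here is a heavily simplified sketch using NLTK’s NaiveBayesClassifier with the {token: True} bag-of-words features used in Daityari’s tutorial. It assumes NLTK is installed. The six training sentences are invented purely for illustration (the article’s model is trained on Twitter data), and tokenising with a regular expression instead of NLTK’s tokenisers is a simplification to avoid downloading extra corpora.

```python
import re

from nltk import NaiveBayesClassifier


def features(text):
    """Bag-of-words features: every lower-cased token present maps to True."""
    return {token: True for token in re.findall(r"[a-z']+", text.lower())}


# Tiny hand-labelled training set, purely illustrative; not the article's data.
TRAINING_DATA = [
    ("to the moon, buying more calls", "Positive"),
    ("diamond hands, this rocket is going up", "Positive"),
    ("huge gains today", "Positive"),
    ("bag holder, lost everything", "Negative"),
    ("crash incoming, sell now", "Negative"),
    ("huge losses today", "Negative"),
]

classifier = NaiveBayesClassifier.train(
    [(features(text), label) for text, label in TRAINING_DATA]
)


def classify_text(text):
    """Return "Positive" or "Negative" for a post or comment."""
    return classifier.classify(features(text))
```

With a model this small the output is only meaningful for words seen during training; unseen words are simply ignored by the classifier, which is one reason a larger, finance-specific training set would help.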

Outputs

Multiple CSVs are produced by the full code. One CSV lists information on posts, whereas the others outline the comments associated with each post.
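As a sketch of how the per-post output could be assembled, the code below scores each classified comment as +1 (Positive) or -1 (Negative), sums the scores per post, and records the most frequently mentioned tickers. The column names (post_id, title, comments, sentiment_sum, top_tickers) and the choice of the top three tickers are hypothetical; the article does not spell out the exact CSV layout here.

```python
import csv
from collections import Counter

# Map classifier labels to scores so sentiment can be summed per post.
LABEL_SCORES = {"Positive": 1, "Negative": -1}


def summarise_post(post_id, title, comments):
    """comments: list of (label, tickers) pairs, one per classified comment."""
    sentiment = sum(LABEL_SCORES[label] for label, _ in comments)
    ticker_counts = Counter(t for _, tickers in comments for t in tickers)
    top = " ".join(t for t, _ in ticker_counts.most_common(3))
    return {"post_id": post_id, "title": title, "comments": len(comments),
            "sentiment_sum": sentiment, "top_tickers": top}


def write_posts_csv(path, summaries):
    """Write one row per post summary."""
    fieldnames = ["post_id", "title", "comments", "sentiment_sum", "top_tickers"]
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(summaries)
```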

Explanation of the post CSV

Explanation of the comments CSV(s)

Closing thoughts

Hopefully, it is now apparent how easily Reddit posts can be downloaded and their sentiment extracted. The full code can be found on my GitHub account. Just remember that the code is not production-ready, and I can’t guarantee its accuracy.

There’s clearly room to hook a variation of this code up to a database, and to perform more intensive NLP using a more appropriate training dataset with TensorFlow or PyTorch.